Deep learning makes for shallow AI

And there has been no alternative in sight since 1982

With the hype about how LLMs will change everything turned up to 11, despite their lackluster achievements in the real world, it’s good to keep a cool head.

Anyone who follows the news could be forgiven for feeling that the pace of innovation is dazzling. That soon, the many limitations we all have experienced will be a thing of the past, because look at how far we’ve come in such a short time!

What we shouldn’t underestimate is the combined creativity of the thousands of people who can be hired with billions of dollars in capital, who are highly motivated to find absolutely every problem that AI could be sold as a solution for, to create fantastic sales demos, and to make the best of fundamentally limited technology.

If you can throw almost infinite resources at a problem and nobody cares about CAPEX, energy, water use or copyrights, it would be damning indeed if that didn’t produce at least some impressive results.

So, what has been happening in AI?

Sadly, the main results are exactly about throwing resources at the problem and clever combinations of existing techniques.

But nothing near a fundamental breakthrough on a problem that has existed in AI since, let’s say, 1982, the year that Paul Werbos applied a mathematical technique called backpropagation to train neural networks. And we’re still using that technique.

Sure, a lot has happened since then. We’ve learned to classify handwritten digits using CNNs (LeCun, 1989), we’ve learned to look at long patterns such as speech using RNNs and translate text using LSTMs (2003), how to train CNNs quickly using GPUs (2004), how to continue patterns in a training data set using GANs (2014) and how to steer that process using diffusion models (2015), leading to image generation services like DALL-E and Stable Diffusion (2022).

And then came “Attention is all you need” (2017), a paper from Google. Which wasn’t about their business model.

It described the Transformer architecture, a new neural network circuit intended to improve automatic language translation. A startup named OpenAI, which at the time wasn’t doublespeak, combined this with Generative Pretraining from 2012 and produced GPT-1, the Generative Pretrained Transformer.

It combined unsupervised pre-training on large amounts of text (reddit, 4chan, stolen books, you know, the usual) with a human-supervised “fine tuning” step to separate desirable from undesirable responses. When they tuned with human ranking of candidate responses for “convincing dialogue”, ChatGPT was born.

Much vibes. Very Eliza. Such wow.

Yeah, grampa. I know. You’re not talking about what’s happening now!

Scaling. Optimization (SSMs, quantization, mixtures of experts). Commodification.

And lipstick.

Yes, output sounds and looks ever more convincing. Dialogue spaces grow bigger. You can continue your dialogue indefinitely now, as it’s saved between sessions. We added special tokens to speak API’ish and click on web pages (“Agentic!”) and we put multiple copies together that can talk to each other (“Multi-Agent LLMs!”).

Underneath it all is still something very much like that trusty transformer from 8 years ago.

So why is that a problem?

It isn’t, unless you expect more from it than it can ever deliver.

And we are expecting more than it can deliver. We’re buying tickets to the south pole, the moon, Jupiter and Alpha Centauri, just after the Wright Brothers mounted a propeller on a piston engine and put that on their flying contraption. And talk shows are full of people fretting over what on earth we should do with all those ships, trains and automobiles, which have become useless overnight.

Yes, that plane was a fantastic invention. You can optimize its engine for 100 years or more. You can replace it with a turbojet. And that simple thing was a pathway to the Boeing 747 and the Airbus A380.

But 100 years on, aviation is still full of problems. It’s energy hungry and polluting. It’s definitely no replacement for all other modes of transport. We didn’t get flying cars. We didn’t reach the moon in an airplane. And there still isn’t a soul who knows how to reach the stars alive.

Why are you so pessimistic about AI?

Because that pig with the lipstick is called Deep Learning, and we haven’t been able to find an alternative since 1982.

(That was an insult to pigs. Not because of the lipstick, but because pigs are intelligent and sensitive).

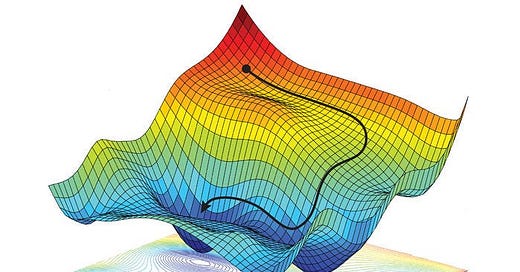

Deep learning makes for shallow intelligence, without a solution in sight. Here’s why.

To train any neural network using Deep Learning, regardless of whether it’s a CNN from 1989 or GPT-4.1 from 2025, you need all training data to be accessible to the training program.

Even for the slightest improvement. You can’t calculate in which direction any of the parameters in the network should be adjusted without a new and representative sample from all data in its training data set.

And that little detail has debilitating consequences. It means that with any neural network that is big enough to require training using Deep Learning from Big Data, you can’t do on-line learning. Yes, you can gather data during use and include that when training the next release, but that’s it.

The model can’t adapt. It can’t deduce. It can’t learn from mistakes.

It’s an intuitive fundamentalist: intuitive because there’s no reasoning, and fundamentalist, because the holy book of training data is perfect and no amount of talking will ever convince it otherwise.

The ability to reflect on the results of your own actions and to adapt your strategy to changing circumstances is a litmus test for intelligence. All deep learning based AI fails this test and will fail for the foreseeable future.

How fundamental is that problem?

If we ever get sufficiently powerful hardware to put all the data that GPT-4.1 was trained on in the palm of your hand (which would require all those gigawatt-consuming data centers full of GPUs to be shrunk, ahem, considerably), the live trained model would still only adjust slowly to large collections of samples.

Training using backpropagation and SGD still wouldn’t deduce, still wouldn’t come to conclusions from single examples that ripple through everything it knows. It still would only mind the law of large numbers.

No large numbers of examples, no gradient; no gradient, no model improvement.
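
To make that concrete, here is a minimal sketch in Python. It is a toy, not an LLM: a one-parameter model `y = w * x` trained with plain SGD on squared error. The data, the learning rate of 0.01 and the names `sgd_step` and `train` are all assumptions picked for illustration. The model first learns the rule `y = 2x` from 200 samples, then sees a single observation from a world where the rule has flipped to `y = -2x`.

```python
import random

def sgd_step(w, x, y, lr):
    """One SGD step for the model y_hat = w * x with squared error loss."""
    grad = 2.0 * (w * x - y) * x  # derivative of (w*x - y)**2 with respect to w
    return w - lr * grad

def train(samples, w=0.0, lr=0.01, epochs=50, seed=0):
    """Plain SGD: many small steps over many shuffled samples."""
    rng = random.Random(seed)
    samples = list(samples)
    for _ in range(epochs):
        rng.shuffle(samples)
        for x, y in samples:
            w = sgd_step(w, x, y, lr)
    return w

# Hypothetical training set: 200 noise-free samples of the rule y = 2x.
data_rng = random.Random(1)
old_world = [(x, 2.0 * x) for x in (data_rng.uniform(-1.0, 1.0) for _ in range(200))]
w_trained = train(old_world)

# The world changes to y = -2x and the model gets exactly one new observation.
new_example = (1.0, -2.0)
w_after_one = sgd_step(w_trained, new_example[0], new_example[1], lr=0.01)

print(w_trained)    # very close to 2.0
print(w_after_one)  # about 1.92, still nowhere near -2.0
```

Strictly speaking, the single example does produce a gradient, but the step it causes is scaled by the learning rate, so the parameter barely moves. You could crank up the learning rate to make one observation count for more, at the price of overwriting what the other 200 samples taught. In this sketch, only repetition gets the model from 2.0 to -2.0.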

That’s also the reason why even Reinforcement Learning, which produces models that play games or control rockets, can’t learn from single experiments in the real world. It needs simulators and endless attempts to work, because every situation needs to repeat a gazillion times. Very useful for physics, and for finite games with fixed rules, not so easy for less regular, less easily simulated environments like traffic.

And it’s also the reason that chat services lie. The 'I apologize, I made a mistake, thank you for pointing out X, the real answer should be Y' is just the most plausible continuation of a dialogue where a human is corrected. The model didn't learn anything, the model is completely unaffected.
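
A toy version of that, as a hedged sketch: the "model" below is a hypothetical bigram table, far simpler than any LLM, and the corpus and the function names `train_bigrams` and `next_word` are made up for illustration. The point it shares with a chat service is that producing a reply only reads the trained parameters and never writes to them.

```python
import copy
from collections import Counter, defaultdict

def train_bigrams(corpus):
    """Count which word follows which; this is the only place 'learning' happens."""
    table = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            table[prev][nxt] += 1
    return {prev: dict(counts) for prev, counts in table.items()}

def next_word(table, context):
    """Return the most frequent continuation of the last word; reads the table only."""
    last = context.split()[-1]
    candidates = table.get(last)
    if not candidates:
        return None
    # sorted() makes the tie-break deterministic (alphabetical).
    return max(sorted(candidates), key=candidates.get)

corpus = ["the capital is paris", "the capital is paris", "the capital is rome"]
model = train_bigrams(corpus)
snapshot = copy.deepcopy(model)

first_answer = next_word(model, "the capital is")
# The user objects, and the same question is asked again in the same dialogue.
second_answer = next_word(model, "the capital is paris no that is wrong the capital is")

print(first_answer, second_answer)  # paris paris
print(model == snapshot)            # True: the dialogue changed nothing in the model
```

A real LLM conditions on the whole dialogue, so within a session its next reply may well change after a correction. What this sketch is meant to show is only the last line: the parameters after the conversation are the same as before it.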

That’s why deep learning produces shallow AI.

And that is pretty fundamental. Sure, you can bolt website browsing capabilities onto the LLM, you can let groups of LLMs exchange their confident bullshit with each other and create an illusion of reasoning, because you can see some intermediate steps. But that’s indeed an illusion, since there is no deduction happening in a logical sense.

It’s just a collection of chat bots spewing the most likely continuation of a previously held dialogue at each other, devoid of meaning.

Aren’t we doing the same?

The more you use ChatGPT, the more you will.

You have been warned.